Publishers Assert AI Model Training Requires Licensing, Not Fair Use

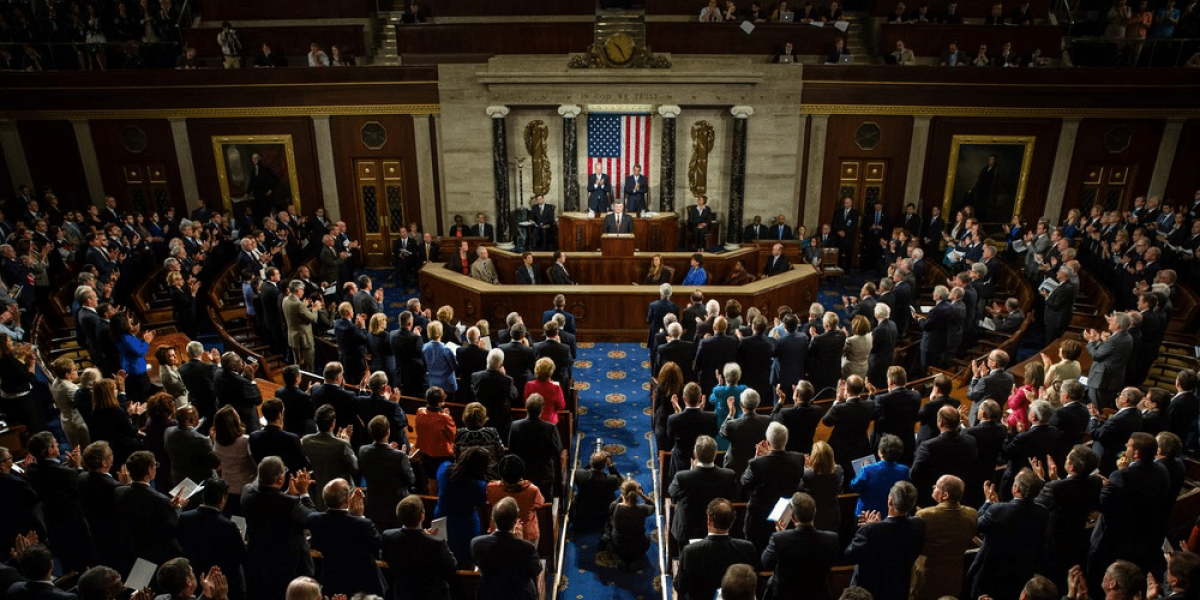

At a recent Congressional hearing, the impact of AI on journalism took center stage. The participants, most of them from the publishing industry, argued that training an AI model on existing content should not be considered fair use under US copyright law.

Roger Lynch, CEO of the magazine publisher Condé Nast, delivered a straightforward message at the hearing. He proposed that if Congress explicitly states that training a generative AI model on copyrighted works requires a license, the free market can address the other challenges AI poses for the media and entertainment industries.

“We believe that a legislative fix can be simple,” Lynch said, urging lawmakers to clarify that using copyrighted content in connection with commercial AI is not fair use and requires a license.

While some tech companies argue that AI training falls under fair use or other existing exceptions in copyright law, Lynch emphasized that fair use is designed for specific purposes such as criticism, parody, scholarship, research, and news reporting. He insisted that it was not meant to enrich technology companies that prefer not to pay for content, and stressed that harm to the market for the original copyrighted works undermines any fair use claim.

Lynch also rejected the notion that obtaining permission to use copyrighted content is impractical, pointing to the music industry, where rights organizations already license content efficiently. He expressed confidence that the free market will produce efficient licensing solutions once AI companies accept that licensing is required.

Lynch's stance challenges the expansive view of fair use taken by AI companies, a point also discussed by committee member Senator Josh Hawley. Concerns were raised that copyright law could be eroded if this broad interpretation of fair use prevails.

‘No AI FRAUD Act’ Introduced to Protect Against AI-Generated Deepfakes

A bipartisan group of House members, led by Democratic Rep. Madeleine Dean and Republican Rep. Maria Salazar, has introduced the ‘No AI FRAUD Act’ in the US House of Representatives. The bill aims to protect individuals from the unauthorized use of their image and voice in AI-generated deepfakes.

The legislation would establish a federal “right of publicity” protecting against the unauthorized use of a person’s likeness, voice, or identity, and would allow individuals to seek monetary damages for harmful, unauthorized uses of their likeness or voice. It specifically addresses concerns about sexually exploitative deepfakes and child sexual abuse material.

Its sponsors say the bill balances individual rights against First Amendment protections for speech and innovation. It responds to the growing threat of AI-generated deepfakes that manipulate voices and images to deceive.

Prominent figures in the music industry, including Recording Industry Association of America (RIAA) Chairman and CEO Mitch Glazier, have applauded the introduction of the ‘No AI FRAUD Act,’ which supporters see as a crucial step in protecting the intellectual property of artists and creators from exploitation.

Universal Music Group (UMG) Chairman and CEO Sir Lucian Grainge strongly supported the proposed legislation, emphasizing the need to prevent the unauthorized use of someone else’s image, likeness, or voice. UMG, a leading music rightsholder, has been actively advocating for a federal right of publicity as AI capabilities continue to advance.

The ‘No AI FRAUD Act’ has also been endorsed by the Human Artistry Campaign, a broad coalition advocating for the protection of human culture and artistry in the face of AI advancements.

Balancing AI Innovation and Individual Rights

While the ‘No AI FRAUD Act’ is lauded for its efforts to protect individuals from AI-generated deepfakes, some critics argue that the legislation could inadvertently stifle innovation in the AI space. Balancing individual rights against the need for technological progress is a challenge, and finding a middle ground will be crucial.

Summary

The call in Congress for clear rules on AI training reflects the ongoing debate over fair use and licensing as the technology evolves. At the same time, the introduction of the ‘No AI FRAUD Act’ signals a proactive approach to addressing the misuse of AI-generated deepfakes. As these discussions unfold, striking a balance between fostering AI innovation and protecting individual rights will be a critical consideration for policymakers.